AI Risk Analysis of Incidents

It can take considerable time to fully assess an incident, the risks involved, and whether an adequate mitigation or other response has been put in place. As in many other areas of our platform, AI features are now available to assist you with this analysis.

A new "AI Risk Analysis" button is available to Wiki Managers on any open incident report. As a Wiki Manager, open the incident report and then click the "AI Risk Analysis" button.

This action helps you determine which policies you have, or need, to ensure that the reported incident, hazard or concern does not happen again. It requires Enhanced AI to be enabled.

This function will intelligently look through your site to see if there are existing policies that can mitigate the incident or concern. If no such policies are found, it will either suggest a modification to an existing policy (and make the change in draft for you), or create a new policy which you can then edit further. It will then use this information to generate a report explaining the incident report, how it's relevant to the operations of the organization, and how each found policy mitigates any risks it poses.
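For readers who find it easier to follow the flow as logic, the decision described above can be sketched roughly as follows. This is only an illustration and not the platform's actual implementation: the names `Policy`, `Mitigation`, `analyze_incident`, `mitigates` and `pick_policy_to_amend` are all hypothetical, and the two callables stand in for judgments that the real feature makes with AI.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Policy:
    title: str
    text: str
    draft: bool = False  # True for suggested changes that are not yet published


@dataclass
class Mitigation:
    policy: Policy
    explanation: str
    suggested: bool = False  # True when the policy is a draft suggestion


def analyze_incident(
    incident: str,
    policies: List[Policy],
    mitigates: Callable[[str, Policy], bool],
    pick_policy_to_amend: Callable[[str, List[Policy]], Optional[Policy]],
) -> List[Mitigation]:
    """Hypothetical sketch of the flow: find, else amend, else create."""
    # Step 1: look for existing policies that already mitigate the report.
    found = [p for p in policies if mitigates(incident, p)]
    if found:
        return [Mitigation(p, f"'{p.title}' already covers this report.") for p in found]

    # Step 2: nothing found, so try to amend an existing policy in draft.
    target = pick_policy_to_amend(incident, policies)
    if target is not None:
        draft = Policy(target.title, target.text + "\n[suggested section]", draft=True)
        return [Mitigation(draft, f"Suggested amendment to '{target.title}'.", suggested=True)]

    # Step 3: otherwise propose a brand new policy in draft for further editing.
    draft = Policy("New policy (draft)", "[suggested policy text]", draft=True)
    return [Mitigation(draft, "Suggested new policy.", suggested=True)]
```

In this sketch every suggestion is returned as a draft, which mirrors the point that suggested changes are made in draft so you can review and edit them before they are used.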

This can save considerable time in ensuring your organization is properly responding to any incidents, hazards or concerns that are raised.

Keep in mind that this feature is still experimental, and any suggestions it provides should be thoroughly reviewed before use.

Viewing the Analysis

Once the analysis is complete, you will be taken to it. If you open an incident report that has previously had an analysis performed, you will see a link like this at the top:

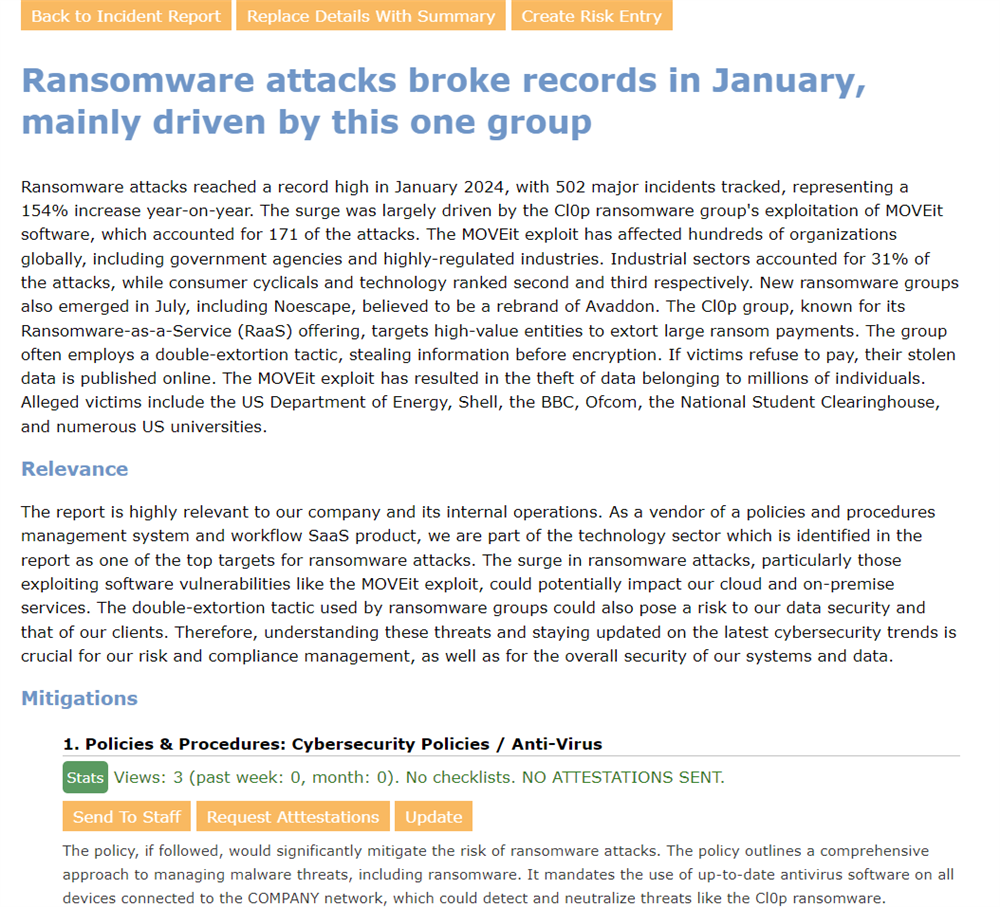

This will also take you to the AI Analysis screen, which will look like this:

At the top is a summary of the incident report. This is generated by the AI and should provide a succinct overview of the text in the incident, hazard or concern.

Below that is an assessment of whether the report is relevant to your organization. This will only appear if the incident type is "Concern", as an incident or hazard is always considered relevant. The relevance assessment is also generated by AI and uses the information entered in Configuration / AI Options, in the Company/Product Description section.

Below the relevance section is a list of mitigations. Each mitigation has a link to the relevant policy or procedure. Below that are some statistics showing how that policy has been performing, which may be key to understanding whether the found policy is actually mitigating the risks. For example, the statistics may tell you that no attestations have been sent for that policy.

Below that are some action buttons that let you reinforce the policy. You can send a link to the policy to a specific group of users, or request attestations right from that page. You can also choose to update the policy, for example to add a quiz.

Below the action buttons is an explanation of how the found policy or procedure would mitigate the concern that was raised.

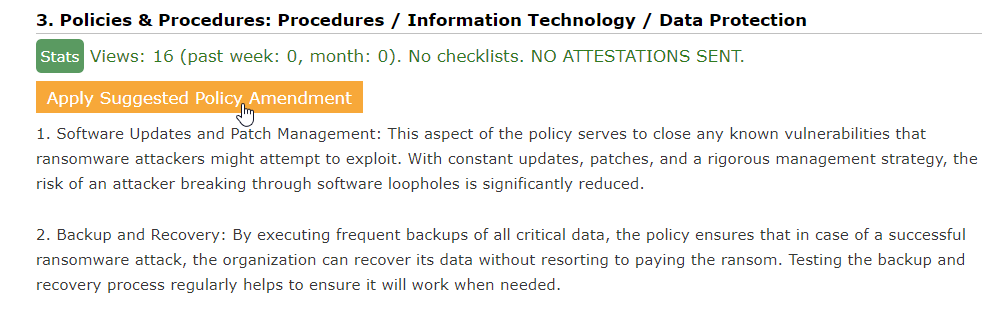

If the mitigations do not fully cover the risks expressed in the incident report, then it will either suggest a new section to add to an existing policy, or provide a whole new policy or procedure. You will see this as a mitigation that will look a bit like this:

You'll notice that the action buttons are replaced with a single button to apply the suggested policy amendment. Below that you will see a preview of the text that will be added to the policy. You can click the button to apply the changes in draft, after which you can make further edits before publishing. The suggestion will then be replaced in this list with a mitigation like the ones above, with an explanation of how the new amendment now mitigates the risk.